In this article, we explore how experiments can improve Marketing Mix Modelling (MMM) at Objective Platform. To get the best results from your marketing measurement, experiments need to become part of your everyday workflow and company culture, not standalone tools. For this to work, you’ll need to rethink how you measure success by questioning and testing your existing hypotheses. We’ll focus on using experiments to validate your assumptions and share some examples of how this works in real situations.

Experiments in marketing are essential for validating the effectiveness of different strategies and channels. Common methods include A/B lift tests and geo-lift studies, which measure the impact of a specific media channel or tactic by comparing a control group with a test group, and so reveal that channel’s incremental value. In a geo-lift study, for instance, marketers increase or decrease investment in certain regions while keeping it constant in others, then compare KPI values between the two sets of regions to observe the change in performance.
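The regional comparison can be sketched as a simple difference in relative KPI changes: the control regions absorb seasonality and market-wide effects, so whatever extra movement appears in the test regions is attributed to the change in investment. This is a minimal sketch, assuming KPI totals are available per group for a baseline period and a test period; `RegionGroup`, `pct_change` and `geo_lift` are hypothetical names, the numbers are illustrative, and production geo-lift tools typically use more robust estimators (for example, synthetic control methods) rather than a raw pre/post comparison.

```python
from dataclasses import dataclass


@dataclass
class RegionGroup:
    """KPI totals for one group of regions (hypothetical structure)."""
    pre_kpi: float   # KPI summed over a baseline period before the test
    test_kpi: float  # KPI summed over the test period


def pct_change(group: RegionGroup) -> float:
    """Relative change in the KPI from the baseline period to the test period."""
    return (group.test_kpi - group.pre_kpi) / group.pre_kpi


def geo_lift(test: RegionGroup, control: RegionGroup) -> float:
    """How much more the KPI moved in the test regions than in the control
    regions, as a difference of relative changes (a fraction; x100 for points)."""
    return pct_change(test) - pct_change(control)


# Hypothetical example: spend was raised in the test regions only.
test_regions = RegionGroup(pre_kpi=100_000, test_kpi=112_000)
control_regions = RegionGroup(pre_kpi=200_000, test_kpi=204_000)
print(f"Lift: {geo_lift(test_regions, control_regions) * 100:.1f} percentage points")
```

Here the test regions grew 12% and the control regions 2%, so roughly 10 percentage points of growth are attributed to the extra spend, under the assumption that both groups would otherwise have moved together.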

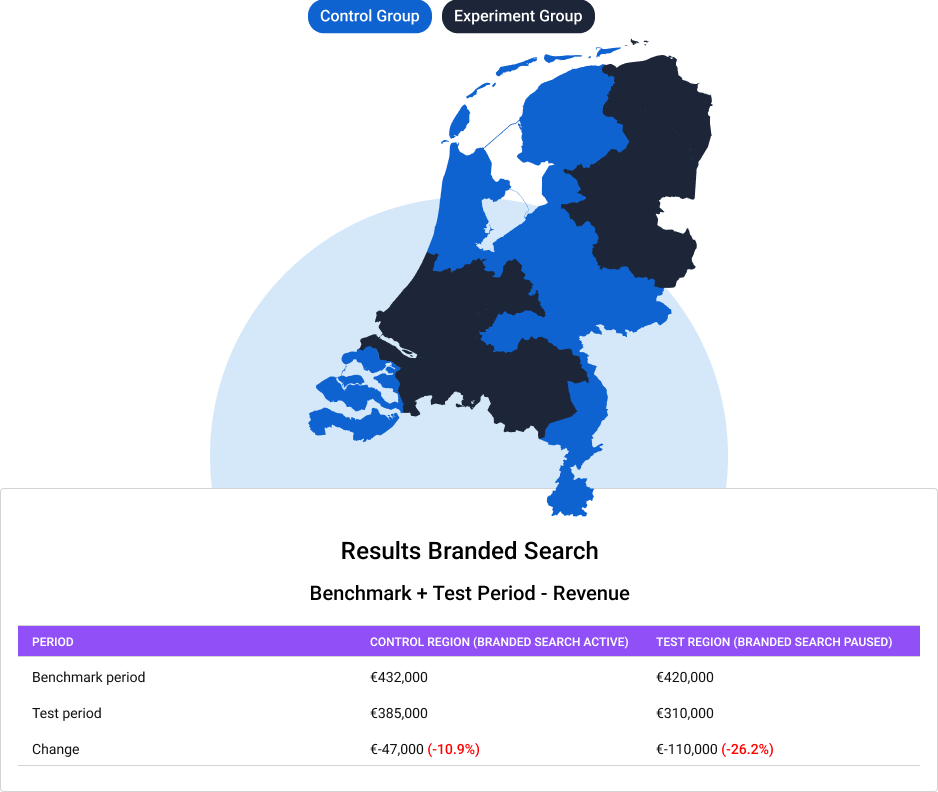

A prime example is a geo-lift study run by a telecom provider to test the effectiveness of its Branded Search campaigns for SIM-only keywords. The provider divided the Netherlands into test and control regions: in the control regions, Branded Search campaigns remained active; in the test regions, they were paused for the duration of the test. By comparing performance across these regions, the provider aimed to understand the incremental impact of its Branded Search campaigns on overall sales and engagement.

If Branded Search drives truly incremental revenue (rather than merely capturing conversions that would occur anyway), then pausing it in selected regions will lead to a measurable decline in revenue.

Branded Search often performs well in attribution tools, but without isolating it from other media, it is difficult to know how much incremental value it truly adds.

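The geo-lift test split the regions into two groups:

- Test regions: Branded Search campaigns were paused during the test period.
- Control regions: Branded Search campaigns remained active.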

All other media activity continued as usual, creating clean conditions to observe what happened when one channel was removed. The primary KPI was revenue, chosen to measure direct business impact. Cost efficiency and ROAS were evaluated separately.
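The study does not describe how regions were allocated to the two groups. As an illustration only, the split could be sketched as a seeded random assignment; the choice of the twelve Dutch provinces as the regional unit, and the names `PROVINCES` and `assign_regions`, are assumptions for this example.

```python
import random

# Hypothetical unit of assignment: the twelve Dutch provinces.
# The original study does not say which regional breakdown was used.
PROVINCES = [
    "Groningen", "Friesland", "Drenthe", "Overijssel", "Flevoland", "Gelderland",
    "Utrecht", "Noord-Holland", "Zuid-Holland", "Zeeland", "Noord-Brabant", "Limburg",
]


def assign_regions(regions: list[str], test_share: float = 0.5,
                   seed: int = 42) -> dict[str, list[str]]:
    """Randomly split regions into a test group (Branded Search paused)
    and a control group (Branded Search active)."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = regions[:]      # copy so the input list is not reordered
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_share)
    return {"test": sorted(shuffled[:n_test]), "control": sorted(shuffled[n_test:])}


groups = assign_regions(PROVINCES)
print("Paused:", groups["test"])
print("Active:", groups["control"])
```

With only twelve units, a purely random split can produce groups with quite different baseline revenue, so in practice regions are often matched or stratified on historical KPI data before assignment.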

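The results revealed a clear difference between the test and control regions:

- Test regions (Branded Search paused): revenue declined by 26.2%.
- Control regions (Branded Search active): revenue declined by 10.9%.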

Revenue in the test regions fell much more steeply than in the control regions (26.2% vs. 10.9%, a gap of 15.3 percentage points), showing that Branded Search was protecting revenue that would otherwise have been lost. This strong signal confirmed that the channel was driving incremental value rather than merely cannibalising traffic that would have converted anyway.
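The reported declines can be turned into an effect estimate in two common ways. This is a minimal sketch, assuming both declines are measured against the same baseline period; the ratio view is an illustrative addition, not a method the study describes.

```python
# Reported revenue changes over the test period (from the study above).
test_change = -0.262     # test regions: Branded Search paused
control_change = -0.109  # control regions: Branded Search active

# Additive view: gap between the two declines, in percentage points.
gap_pp = (test_change - control_change) * 100

# Ratio view (assumption): treat the control regions' trend as the
# counterfactual for the test regions and express the shortfall
# relative to that counterfactual.
relative_effect = (1 + test_change) / (1 + control_change) - 1

print(f"Gap: {gap_pp:.1f} pp")
print(f"Relative to counterfactual: {relative_effect:.1%}")
```

The additive gap is the simplest summary (about 15.3 points). The ratio view asks how far test-region revenue ended up below where it would have been had it followed the control regions’ trend, which works out at roughly 17%.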

The experiment confirmed the hypothesis: Branded Search contributed real, incremental revenue. With this insight, the provider could justify continued investment in this channel. Importantly, they could feed this evidence back into their MMM to improve future scenario planning and budget allocation. While many marketing teams debate whether certain channels are over-credited, this kind of geo-lift testing provides a clear, objective view of what is actually working.
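One simple way to feed a result like this back into an MMM is to compare the experiment’s measured incremental revenue with the contribution the model attributes to the same channel, for the same regions and period. This is a generic illustration, not Objective Platform’s implementation: the `ChannelEstimate` fields, the helper functions and all numbers are hypothetical, because the study’s absolute revenue and spend figures are not given here.

```python
from dataclasses import dataclass


@dataclass
class ChannelEstimate:
    """Hypothetical figures for one channel, in the test regions, over the test period."""
    mmm_contribution: float  # revenue the MMM attributes to the channel
    experiment_lift: float   # incremental revenue measured by the geo-lift test
    spend: float             # channel spend covered by the test


def calibration_factor(est: ChannelEstimate) -> float:
    """Experiment-measured over model-estimated incremental revenue.
    Above 1: the MMM under-credits the channel; below 1: it over-credits it."""
    return est.experiment_lift / est.mmm_contribution


def incremental_roas(est: ChannelEstimate) -> float:
    """Incremental revenue per unit of spend, according to the experiment."""
    return est.experiment_lift / est.spend


# Illustrative numbers only.
branded_search = ChannelEstimate(mmm_contribution=400_000,
                                 experiment_lift=300_000, spend=60_000)
print(f"Calibration factor: {calibration_factor(branded_search):.2f}")
print(f"Incremental ROAS: {incremental_roas(branded_search):.1f}")
```

A factor well below 1 suggests the model over-credits the channel; well above 1 suggests under-crediting. Many MMM frameworks go a step further and use experimental results to constrain the channel’s estimated effect, for example as a prior in a Bayesian model.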

At Objective Platform, we encourage clients to integrate experimentation directly into their MMM workflow. A well-designed experiment:

Experiments like these don’t just measure performance; they shape smarter strategy.

The takeaway: Geo-lift tests don’t just measure – they clarify. By isolating the impact of a single tactic, you can make more confident media decisions and build a Marketing Mix Model grounded in real-world behaviour.